Issues with Space On-Premises installation.

Hello,

I'd like to present your solution in my company, but I need to install it on my VM.

I've followed this guide (https://www.jetbrains.com/help/space/production-installation.html) and ran into this issue:

LAST SEEN TYPE REASON OBJECT MESSAGE

2m28s Warning FailedScheduling pod/jb-space-space-549ddb8bcd-6888x 0/1 nodes are available: 1 node(s) didn't match pod anti-affinity rules. preemption: 0/1 nodes are available: 1 No preemption victims found for incoming pod.

2m28s Warning FailedScheduling pod/jb-space-space-549ddb8bcd-l5sfj 0/1 nodes are available: 1 node(s) didn't match pod anti-affinity rules. preemption: 0/1 nodes are available: 1 No preemption victims found for incoming pod.

I'm on Ubuntu 22.04 with microk8s.

Do you have any ideas?

Also, how should this step be done: "Configure the TCP proxy for the VCS Ingress, namely, configure the Ingress Controller map. If you use Kubernetes Nginx Ingress Controller, follow this guide."?


bartiszosti could you please make sure you have at least 5 worker nodes in your cluster, then try to downscale the number of replicas for all Space pods to 1, and let us know about the results?
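A minimal sketch of the downscaling step, assuming the Space components run as Deployments (as the pod names in the events output suggest) and were installed into a namespace called `space`. The namespace and the function names are placeholders, not taken from this thread.

```shell
# Assumption: "space" is a placeholder namespace; pass your own as the first argument.

# Scale every Deployment in the namespace down to a single replica.
scale_to_one() {
  kubectl -n "${1:-space}" scale deployment --all --replicas=1
}

# List the pods with the node each one was scheduled on.
show_pods() {
  kubectl -n "${1:-space}" get pods -o wide
}
```

Calling `scale_to_one space` followed by `show_pods space` shows whether the pods are still pending after the change.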

As for your question about the Ingress Controller, could you please elaborate if you had any particular issues when going through the guide?

https://kubernetes.github.io/ingress-nginx/user-guide/exposing-tcp-udp-services/
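In that guide, each entry of the TCP services ConfigMap maps a port exposed by the ingress controller to a value of the form `<namespace>/<service>:<service port>`. The sketch below only builds a JSON merge patch for one such entry; the function name and all arguments are illustrative placeholders, and the ConfigMap name and namespace depend on how the controller was installed.

```shell
# Build a JSON merge patch that adds one entry to the TCP services ConfigMap.
# Key   = port exposed by the ingress controller
# Value = "<namespace>/<service>:<service port>"
tcp_service_patch() {
  ext_port="$1"; namespace="$2"; service="$3"; svc_port="$4"
  printf '{"data":{"%s":"%s/%s:%s"}}\n' "$ext_port" "$namespace" "$service" "$svc_port"
}

# Possible usage (names in angle brackets are placeholders):
#   kubectl -n <controller-namespace> patch configmap <tcp-configmap> \
#     --type merge -p "$(tcp_service_patch 9000 default example-go 8080)"
```

The controller must also listen on the exposed port, so the port has to be added to the controller's own container or service ports as well.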

Hello Pavel,

thanks for your answer.

I had only one worker, but now I have 5 workers in the cluster.

I also added to the values.yaml

It helped, but now my pods got stuck in the "Init" state.

During initialization I had only these warnings

About the Ingress Controller: the guide you sent is clear, but I have no idea which service and which port I should add to the ConfigMap. Could you tell me that? I feel I'm close to getting it running. It's the last thing I haven't done.

Hey bartiszosti,

if it's only for presentation purposes, you can edit the deployments and delete the antiaffinity settings:

find and delete the following lines:

Just another question, did you install postgresql, redis, elasticsearch and minio beforehand, or do you already have those services?

Hi Okay, I didn't have these services.

Currently, I have other issues...

1. The login button doesn't work. If I enter my credentials and click the button, nothing happens, but after opening Space in another tab I land on the onboarding page. Why?

2. I'm not able to upload the company logo during on-boarding nor the user's profile picture. I always have "Something went wrong. If the problem persists, please contact the Space support team." or something about logs from server. Why? How can I get these logs?

Another question: can I have only two workers? I have only two physical machines, so it makes no sense to duplicate workers on the same machine.

bartiszosti, for the sake of a better order, could you please create separate support requests here? The following information would be useful to start investigations:

Case 1: Are there any errors in the browser console and network tabs? Is Space installation configured behind any reverse proxy? Do you use self-signed or CA certificates?

Case 2: Please open the browser console and check if there are any errors there. Also, check for any errors in Space and MinIO pods.

As for the number of worker nodes, please note they could be virtual and hosted on a single machine. Below is the link to the docs with more details about k8s nodes:

https://kubernetes.io/docs/concepts/architecture/nodes/

Hello,

Of course I can create separate requests, but so far the support here is great.


I found the issue. When I was trying to log in, I saw an error about mixed HTTP and HTTPS content. The solution is to delete the space.altUrls value. I had an http address there. I have no idea why it used this URL.

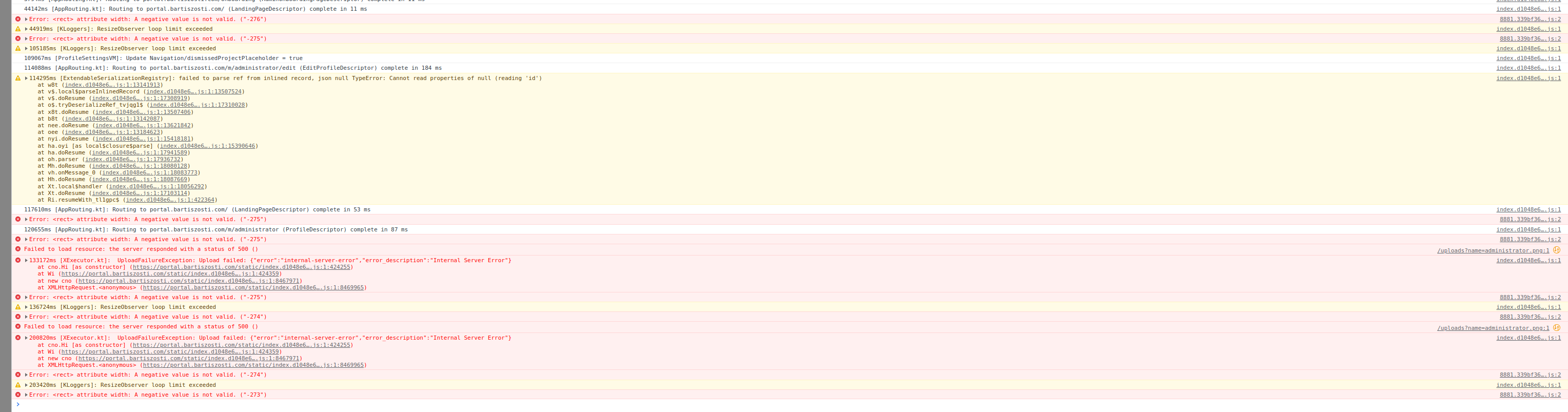

The second issue still exists. When I try to upload the company's logo or a user's profile picture, I see this output.

bartiszosti, glad to hear you managed to fix this issue. As for the issue with uploading images, please reproduce it once again and then provide the logs from the Space and MinIO pods. The command should be `kubectl -n ${namespace} logs ${pod_id}`.
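If the pod IDs are not known in advance, the logs of every pod in the namespace can be gathered in one pass. This is a rough sketch; the function name, the output directory, and the 15-minute window are arbitrary choices, not part of the Space documentation.

```shell
# Save recent logs of every pod in a namespace to one file per pod.
# $1 = namespace, $2 = output directory (default: ./space-logs)
collect_logs() {
  ns="$1"; out="${2:-./space-logs}"
  mkdir -p "$out"
  # "get pods -o name" prints entries such as "pod/<pod_id>".
  for pod in $(kubectl -n "$ns" get pods -o name); do
    kubectl -n "$ns" logs "$pod" --all-containers --since=15m \
      > "$out/$(basename "$pod").log" 2>&1
  done
}
```

Reproduce the upload error first, then run `collect_logs <namespace>` so the relevant entries fall inside the time window.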

Hello,

the issue was that I didn't create buckets in the MinIO.

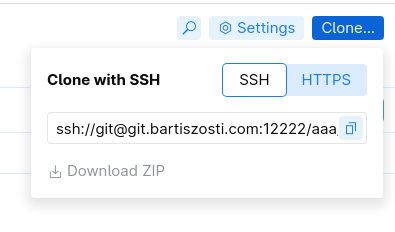
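For anyone hitting the same problem, the buckets can be created with the MinIO client (`mc`). This is only a sketch: the alias and bucket names are placeholders, and the real bucket names must match whatever the Space configuration expects, which this thread does not list.

```shell
# Create each named bucket under an existing mc alias, skipping ones that already exist.
# $1 = mc alias, remaining arguments = bucket names
create_buckets() {
  alias_name="$1"; shift
  for bucket in "$@"; do
    mc mb --ignore-existing "$alias_name/$bucket"
  done
}

# Possible usage (all values in angle brackets are placeholders):
#   mc alias set spaceminio http://<minio-host>:9000 <access-key> <secret-key>
#   create_buckets spaceminio <bucket-1> <bucket-2>
```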

Of course, I have another question. How can I run the VCS SSH server on port 22?

I moved the server's own SSH server to a different port (10022), changed vcs.service.ports.ssh in values.yaml to 22 and ...

Another question. I have these services created by Space.

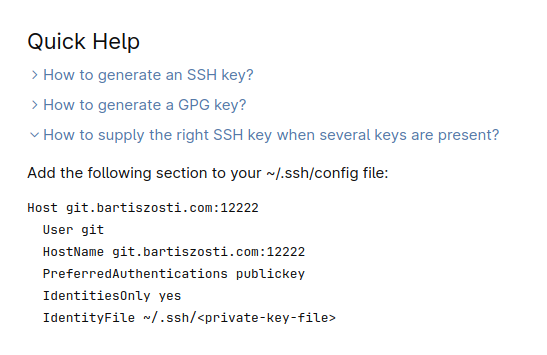

I know I must add the space-vcs service to nginx-ingress-tcp-microk8s-conf to expose the SSH server. So far I use the file below, but I'm not sure which service I should use: space-vcs or space-vcs-ext. Could you tell me that? If it's space-vcs-ext, how should the file below look?

The next step to expose the SSH server is to add a port entry to nginx-ingress-microk8s-controller. I do it with the command below, but do you know a better way? This tutorial doesn't work as expected -> https://microk8s.io/docs/addon-ingress.

kubectl patch ds -n ingress nginx-ingress-microk8s-controller --type='json' -p='[{"op": "add", "path": "/spec/template/spec/containers/0/ports/-", "value":{"containerPort":12222,"name":"space-vcs-ssh","hostPort":12222,"protocol":"TCP"}}]'

bartiszosti, space-vcs is the correct service name. Instead of patching the ConfigMap directly, we'd recommend editing it through the values.yaml file of the Nginx Ingress Controller Helm Chart (at the same time, the best point of contact would be microk8s project maintainers).

If I'm not mistaken, you're trying to change both internal and external ports. If that's true, please note that the internal one is defined by the app and shouldn't conflict with any others. It means it'd be enough to specify an external port only (22222 in the example below):

Thanks for your answer.

You're only half right, because your solution enables the connection on the external port, but the address shown on the website will still use the internal one.

What is the purpose of the space-vcs-ext service?

bartiszosti, oh, I see. Could you now change the `vcs.service.ports.ssh` parameter to your external port value? As for `space-vcs-ext` service, it could be disabled with `vcs.externalService.enabled` set to false.

The change has the same effect as I described before.

The container has no permission to use port 22.

Do you know how to do that?

bartiszosti, could you please clarify how you applied these changes? Please try to scale the pods down to 0, apply the changes with helm upgrade, then scale the pods back up.
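The sequence above could look roughly like this, assuming the Space components are Deployments. The function name is hypothetical, and the namespace, release name, chart reference, and values file are all supplied by the caller because none of them are confirmed in this thread.

```shell
# Scale down, re-apply the Helm values, then scale back up.
# $1 = namespace, $2 = Helm release, $3 = chart reference,
# $4 = values file, $5 = replica count to restore (default: 1)
reapply_values() {
  ns="$1"; release="$2"; chart="$3"; values="$4"; replicas="${5:-1}"
  kubectl -n "$ns" scale deployment --all --replicas=0 &&
  helm -n "$ns" upgrade "$release" "$chart" -f "$values" &&
  kubectl -n "$ns" scale deployment --all --replicas="$replicas"
}
```

Each step runs only if the previous one succeeded, so a failed upgrade leaves the pods scaled down for inspection.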

Hello.

I did it using the commands below.

Finally, I managed to bind the SSH server to port 22.

Unfortunately, I had to run the container as the root user, which isn't good, and add the NET_BIND_SERVICE capability.

I read on the Internet that adding the NET_BIND_SERVICE capability should be enough, but not here. Could you tell me why?
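One possible explanation, offered as an assumption rather than a confirmed diagnosis of the Space image: capabilities added through the container securityContext generally do not become effective for a process running as a non-root user unless the binary itself carries file capabilities, so NET_BIND_SERVICE alone may not help. A commonly used alternative is lowering the `net.ipv4.ip_unprivileged_port_start` sysctl for the pod, which recent Kubernetes versions treat as a safe sysctl. The sketch below only builds the patch; the function name and the deployment name in the usage comment are placeholders, and a later helm upgrade may overwrite a manual patch.

```shell
# Build a patch that lets non-root processes in the pod bind ports
# starting from the given number (e.g. 22), via a pod-level sysctl.
unprivileged_port_patch() {
  printf '{"spec":{"template":{"spec":{"securityContext":{"sysctls":[{"name":"net.ipv4.ip_unprivileged_port_start","value":"%s"}]}}}}}\n' "$1"
}

# Possible usage (names in angle brackets are placeholders):
#   kubectl -n <namespace> patch deployment <vcs-deployment> \
#     --type strategic -p "$(unprivileged_port_patch 22)"
```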